In the previous blog post, we showed how overlay Equal Cost Multipath (ECMP) distributes web traffic when multiple Virtual Machines (VMs) hosting the same web service IP (IP-X) run on different hypervisors.

In this post, we increase the number of VMs on the hypervisors and look at how to connect them efficiently.

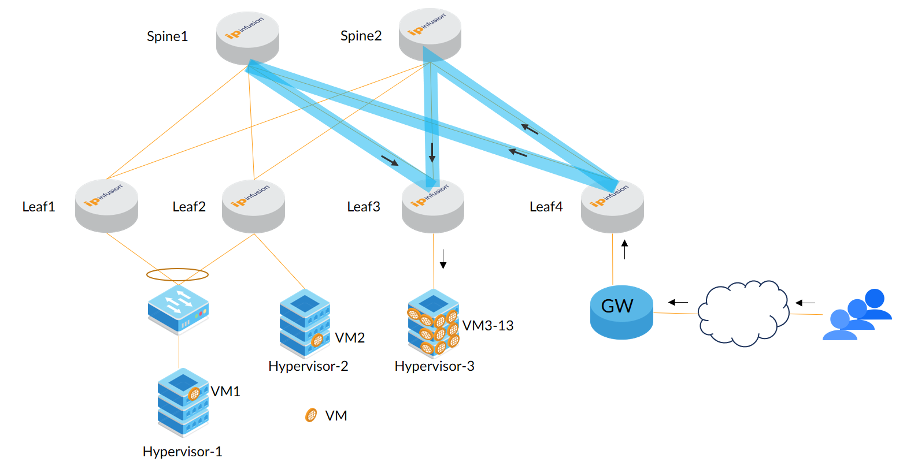

Figure 1 shows a larger number of VMs with IP-X hosted on Hypervisor-3.

Figure 1: Multiple VMs hosting the same subnet

First, we need to understand how these VMs connect to the leaf devices. Each VM has a global/service IP on its loopback (IP-X in this case) and a connected IP on its interface. Using the connected IP, the VM establishes an EBGP session with the BGP neighbor in the leaf’s VRF. Once the session is up, the VM advertises its loopback IP (IP-X) to signal its availability and, in return, receives a default route from the leaf.

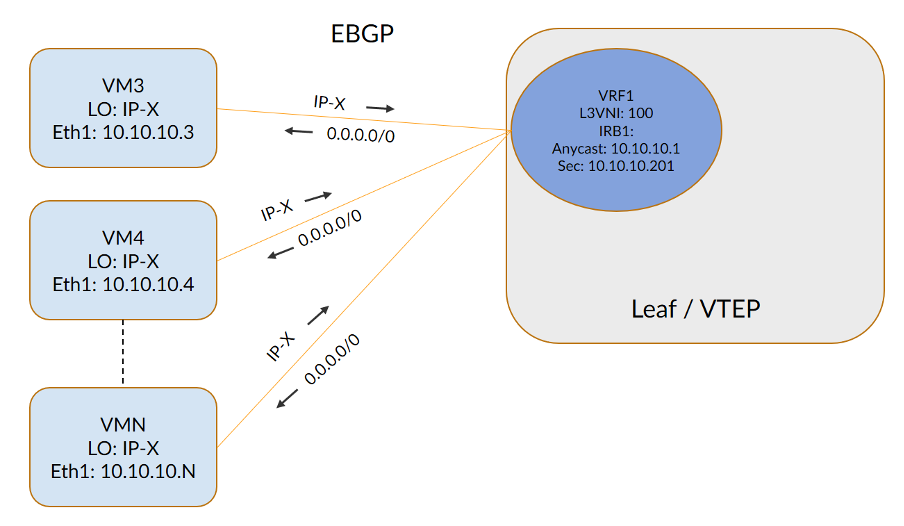

Figure 2: VMs connecting to leaf VRF using EBGP

The leaf VRF routing table now shows an ECMP route to IP-X with as many paths as there are VMs announcing IP-X. In the VRF, 10.10.10.1 is used as the anycast GW IP, which is sent as the next-hop of the default route.

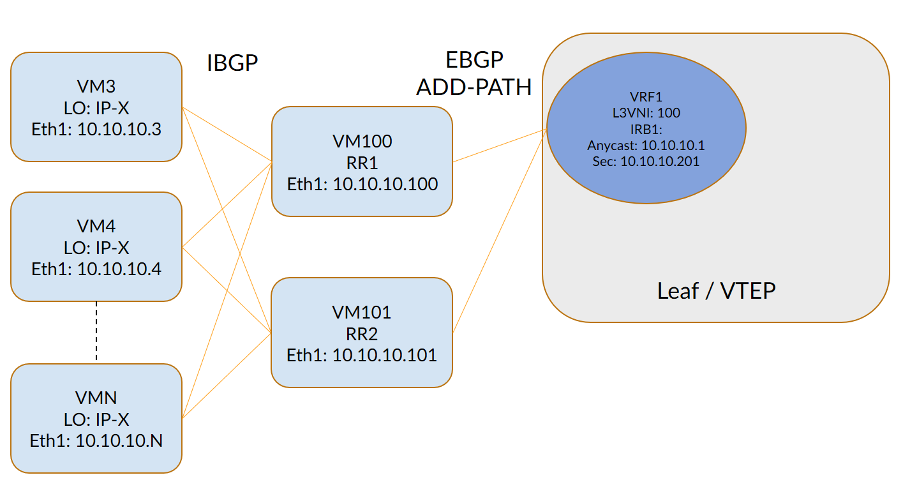

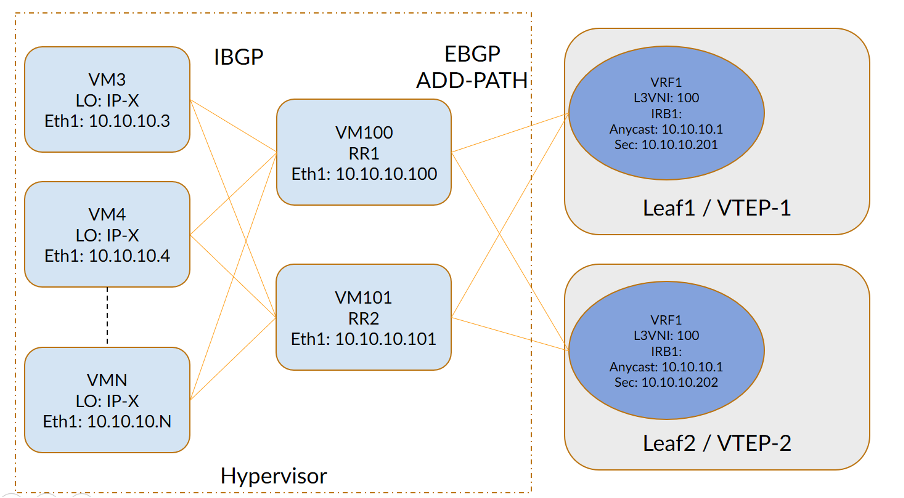
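To make the ECMP behaviour concrete, here is a minimal Python sketch of a leaf VRF table collecting one next-hop per VM that announces IP-X and then hashing a flow onto one of them. It is an illustration only, not how OcNOS implements forwarding. The `VrfRib` class, the VM addresses in 10.10.10.0/24, the 192.0.2.10/32 stand-in for IP-X, and the SHA-256 flow hash are all assumptions made for the example.

```python
import hashlib


class VrfRib:
    """Toy model of a leaf VRF table: prefix -> set of BGP next-hops."""

    def __init__(self):
        self.routes = {}

    def learn(self, prefix, next_hop):
        # Each VM that announces the prefix contributes one next-hop.
        self.routes.setdefault(prefix, set()).add(next_hop)

    def withdraw(self, prefix, next_hop):
        # A VM going away removes only its own path.
        self.routes.get(prefix, set()).discard(next_hop)

    def ecmp_paths(self, prefix):
        return sorted(self.routes.get(prefix, set()))

    def pick_next_hop(self, prefix, flow):
        # Hash the flow tuple so packets of one flow stay on one path.
        paths = self.ecmp_paths(prefix)
        if not paths:
            return None
        digest = hashlib.sha256(repr(flow).encode()).digest()
        return paths[int.from_bytes(digest[:4], "big") % len(paths)]


IP_X = "192.0.2.10/32"  # hypothetical stand-in for IP-X
rib = VrfRib()
for vm_ip in ("10.10.10.11", "10.10.10.12", "10.10.10.13"):  # assumed VM IPs
    rib.learn(IP_X, vm_ip)

# (source IP, source port, destination IP, destination port, protocol)
flow = ("198.51.100.7", 40001, "192.0.2.10", 443, "tcp")
print(rib.ecmp_paths(IP_X))
print(rib.pick_next_hop(IP_X, flow))
```

Adding a fourth VM is just one more `learn` call, which mirrors how the path count in the VRF follows the number of VMs announcing IP-X.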

The challenge is that whenever a new VM is provisioned, the BGP neighbor configuration in the leaf VRF has to be modified, which complicates orchestration. We can simplify this by introducing Route Reflector (RR) VMs within the same hypervisor. The service VMs peer with the RR VMs, and the RR VMs peer with the leaf VRFs. This way, the BGP configuration in the leaf VRF stays the same regardless of how many service VMs are added or removed.

Figure 3: VMs connecting to leaf VRF via RR

Note that this design requires the BGP Additional Paths (ADD-PATH) feature on the EBGP sessions to preserve the next-hops. With EBGP ADD-PATH, the leaf VRF can form ECMP paths to IP-X for as many VMs as advertise it, each with its own next-hop.

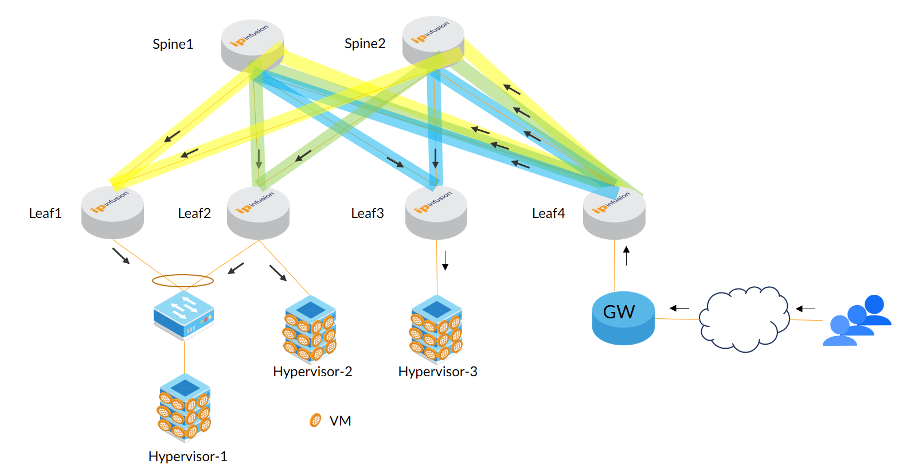
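The effect of ADD-PATH can be sketched with a small Python model of what an RR VM passes on to the leaf for a single prefix. This is a simplification for illustration: the function name `advertise_to_leaf`, the VM addresses, and the "lowest address wins" rule standing in for the full BGP best-path decision are assumptions, not the actual protocol logic.

```python
import ipaddress


def advertise_to_leaf(next_hops, add_path):
    """Return (path_id, next_hop) tuples the RR VM sends for one prefix."""
    if not next_hops:
        return []
    if add_path:
        # With ADD-PATH every path is sent, each tagged with a path identifier.
        ordered = sorted(next_hops, key=ipaddress.ip_address)
        return [(path_id, nh) for path_id, nh in enumerate(ordered, start=1)]
    # Without ADD-PATH only a single best path is sent.
    # Lowest address is a stand-in for the real best-path tie-breakers.
    best = min(next_hops, key=ipaddress.ip_address)
    return [(None, best)]


# Assumed connected IPs of three service VMs announcing IP-X to the RR VM.
vm_next_hops = ["10.10.10.13", "10.10.10.11", "10.10.10.12"]

without_add_path = advertise_to_leaf(vm_next_hops, add_path=False)
with_add_path = advertise_to_leaf(vm_next_hops, add_path=True)

print(len(without_add_path), "path(s) without ADD-PATH")
print(len(with_add_path), "path(s) with ADD-PATH")
```

In this model the leaf sees only one next-hop without ADD-PATH, so it cannot build an ECMP group, while with ADD-PATH it sees one path per service VM.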

Now let us introduce some more redundancy. If we apply the same design to Hypervisor-1 as described in the topologies above, the BGP connections would look like the following.

Figure 4: Hypervisor connecting to more than one leaf

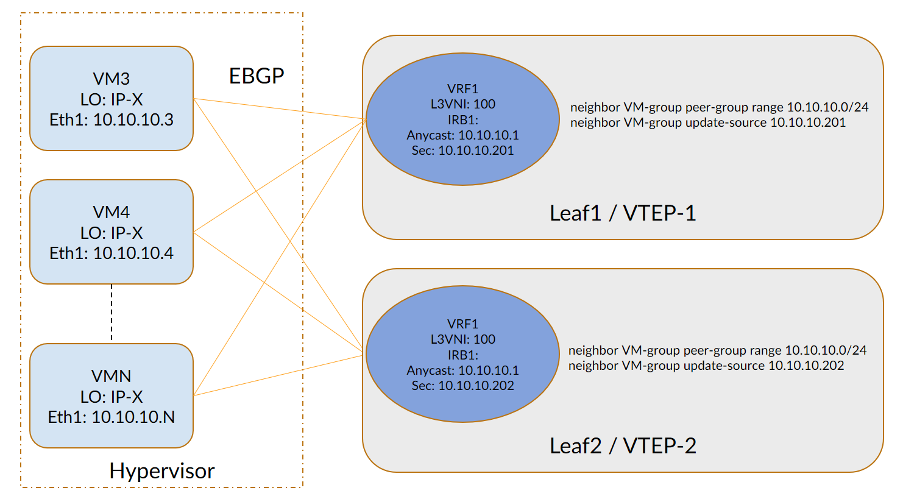

Is there an alternative that simplifies these BGP connections? Yes: with dynamic BGP peering on the leaf nodes, the leaf accepts sessions from the VMs without a separate neighbor entry for each one.

Figure 5: VMs connecting to leafs over dynamic peering configured on leaf devices
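As a rough sketch of the idea behind dynamic peering, the leaf can be thought of as checking each incoming BGP connection against a configured listen range instead of a per-neighbor list. The Python below models that check. The listen range 10.10.10.0/24, the AS number 65101, the peer limit, and the function name `accept_dynamic_peer` are hypothetical values chosen for the example and do not reflect OcNOS configuration syntax.

```python
import ipaddress

LISTEN_RANGE = ipaddress.ip_network("10.10.10.0/24")  # assumed VM subnet
ALLOWED_PEER_AS = {65101}                             # hypothetical VM AS
MAX_DYNAMIC_PEERS = 4                                 # hypothetical limit


def accept_dynamic_peer(src_ip, peer_as, active_peers):
    """Decide whether an incoming BGP session matches the listen range."""
    addr = ipaddress.ip_address(src_ip)
    if addr not in LISTEN_RANGE:
        return False  # source is outside the configured range
    if peer_as not in ALLOWED_PEER_AS:
        return False  # unexpected remote AS
    if src_ip in active_peers:
        return True   # already-established peer reconnecting
    return len(active_peers) < MAX_DYNAMIC_PEERS


active = set()
for vm_ip in ("10.10.10.11", "10.10.10.12", "172.16.0.5"):
    if accept_dynamic_peer(vm_ip, 65101, active):
        active.add(vm_ip)

print(sorted(active))
```

A newly provisioned VM in the range is accepted without any change on the leaf, which is the property that makes this method attractive for orchestration.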

With the second method (dynamic peering), the leaf VRF has more BGP sessions to manage, whereas with the first method (RR VMs) that work is offloaded to the RR VMs. Each deployment should choose whichever method fits best.
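The trade-off can be put into rough numbers with a small Python sketch that counts, per leaf VRF and per hypervisor, how many configuration entries and live BGP sessions each approach implies. The function `leaf_bgp_load`, the method labels, and the assumption of one listen range for dynamic peering are illustrative choices, not measured values.

```python
def leaf_bgp_load(service_vms, rr_vms, method):
    """Estimate leaf-side config entries and live sessions for one hypervisor."""
    if method == "direct":
        # One static neighbor and one session per service VM.
        return {"config_entries": service_vms, "sessions": service_vms}
    if method == "rr":
        # The leaf only peers with the RR VMs; service VMs peer with the RRs.
        return {"config_entries": rr_vms, "sessions": rr_vms}
    if method == "dynamic":
        # One listen range (assumed), but still one session per service VM.
        return {"config_entries": 1, "sessions": service_vms}
    raise ValueError(f"unknown method: {method}")


for method in ("direct", "rr", "dynamic"):
    print(method, leaf_bgp_load(service_vms=8, rr_vms=2, method=method))
```

With eight service VMs and two RR VMs in this model, both the RR and dynamic methods keep the leaf configuration small, but only the RR method also keeps the number of sessions on the leaf small.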

Note that the BGP connections shown in both methods are logical. Physically, they can go via the Top of Rack (TOR) switch, which has a LAG connection towards two leafs with the same ESI on those interfaces.

These logical BGP connections use regular secondary IPs on the IRB interface, which are unique in each leaf VRF within a VPN. The anycast IP, by contrast, acts as the Gateway (GW) IP for the default routes distributed from the leaf VRF. The anycast GW IP has an associated anycast MAC, so any leaf that receives the traffic can accept it and route it onward.

With all of this in place, we can run multiple VMs on multiple hypervisors, all hosting the same IP-X, and overlay ECMP will load-share the traffic across them.

Figure 6: Load sharing with multiple VMs on multiple hypervisors

All the features discussed in this article are supported in OcNOS 6.3.

Contact us today to learn more about IP Infusion OcNOS-based networking software.

Prasanna Kumara S is a Technical Marketing Engineer for IP Infusion.